In a country riven by intense political polarization, who can we trust to help us get dependable answers to vital questions that are central to our civic life?

Ultimately—in a digital world besieged by “fake news,” “alternative truths,” doctored videos, phony websites, powerful algorithms, Russian trolls, and relentless bots—what is the future of truth?

The future of truth? It’s a big question, perhaps the central one of our world circa 2019. The Pew Research Center’s internet and technology project has set out to try to answer it by exploring questions about online knowledge, opinion, trust, and, yes, truth that are fundamental to the future of democracy.

These issues stand at the forefront of a national discussion at a time when many people either scrutinize news and information sources for signs of bias or dismiss entirely the notion that there are any believable sources at all. When Dictionary.com chooses “misinformation” as its word of the year, as it did for 2018, and the Oxford Dictionaries chooses “post-truth” as the word of the year, as it did in 2016, sorting out how the internet and its users will be able to distinguish fact from fiction has never been more timely.

“The people who created the internet, particularly the popular commercial internet, had nothing but good hopes that it would be a net benefit to society in almost every possible way,” says Lee Rainie, who directs the center’s internet and technology research. “Now, we are in a different place. The narrative has shifted dramatically in the last two years, and people are writing pieces asking ‘Is the Internet a Failed State?’ and ‘Is the Internet Going to Kill Us All?’”

Pew and its partner, the Imagining the Internet Center at Elon University in North Carolina, have been studying the likely future impact of digital technology on society for more than 14 years. They’ve generated more than 30 reports and amassed a database of some 10,000 experts, including some of the world’s leading technologists, scholars, social media practitioners, visionaries, and strategic thinkers, for a series of “Future of the Internet” reports.

Over the years, in multiple Pew canvassings, many experts have expressed anxiety about the way people’s online activities were undermining truth, fomenting distrust, widening social divisions, and jeopardizing society’s health and well-being. For its 2017-18 research, the center and Elon asked these same experts whether society would be able to combat the spread of lies and misinformation. “We asked this question,” says Rainie, “because it seemed the most urgent issue around the globe.”

Specifically, the question was: “In the next 10 years, will trusted methods emerge to block false narratives and allow the most accurate information to prevail in the overall information ecosystem? Or will the quality and veracity of information online deteriorate due to the spread of unreliable, sometimes even dangerous, socially destabilizing ideas?”

The answers were collated in one of the latest in the series of reports, “The Future of Truth and Misinformation Online,” which is based primarily on a nonscientific canvassing that drew 1,116 expert respondents. The replies showed a stark division of opinion: 51 percent of these experts predicted the information environment would not improve, while 49 percent said they believed that it would.

In categorizing the results, the research team identified these broad themes and subthemes:

The 51 percent with a negative outlook for the future believe that bad actors will inevitably thwart efforts to seek truth. According to these respondents, corporate and political interests profit from sensationalism and turmoil that generate viral web traffic, and will continue to use social media to appeal to the baser side of human nature: selfish, tribal, gullible, and greedy information consumers who will believe and buy whatever they are told to. This line of thinking holds that advancing technology will only make things worse because the human brain is not wired to contend with the unprecedented volume of information now being generated. Sorted by algorithms and artificial intelligence, that information bombards readers with so much material, much of it confusing, that they give up on even trying to find the truth.

The 49 percent with an optimistic view for the next decade believe, conversely, that our better human instincts will lead society to come together to fix problems and seek the truth. Lies and misinformation have always polluted public discourse, these respondents say, but smart, dedicated people historically have found ways to isolate those with bad intentions. Technology will help people become more aware and adept at seeking and finding what’s real, the argument goes, because the rising speed, reach, and efficiencies of the internet, apps, and social media platforms can be harnessed to better detect and combat falsehoods. Legal and regulatory remedies—already being adopted in Western Europe—could create liability for people who intentionally spread inaccuracies; impose new restrictions on Facebook, Twitter, Google, and other major platforms; and curb the damage done by anonymous and fake purveyors of hate speech and misinformation.

Within this disparity of opinion, the optimists and pessimists agree that this is a watershed moment for grappling with a serious threat to democratic institutions, with one expert calling our era a “nuclear winter of misinformation.” They also agree that technology alone cannot unscramble the chaos that it has helped to create. Instead, they believe that the public must support quality journalism and help develop and fund the production of objective, accurate information, and that society must make “information literacy” a primary goal at all levels of education.

Rainie’s co-author Janna Anderson, an Elon professor of communications who has helped oversee the “Future of the Internet” reports, says that over the years, many internet experts have passionately described what she characterizes as an existential battle between the uplifting promise of unparalleled human connectivity and the unprecedented ability to mislead and manipulate the public to amass economic and political power. “Many brilliant experts believe there will be no effective solution to the problems of the digital age until people in all realms of influence begin to work together to turn the tide,” Anderson says.

A recent report highlighted what’s at stake. “The Fate of Online Trust in the Next Decade” explored how the public views facts and trust in a democracy. The researchers again queried their panel of experts, who raised serious questions about the danger to the nation when trust—a social, economic, and political binding agent—is seriously eroded because lies and misinformation have undermined public confidence in the idea that truth is even knowable.

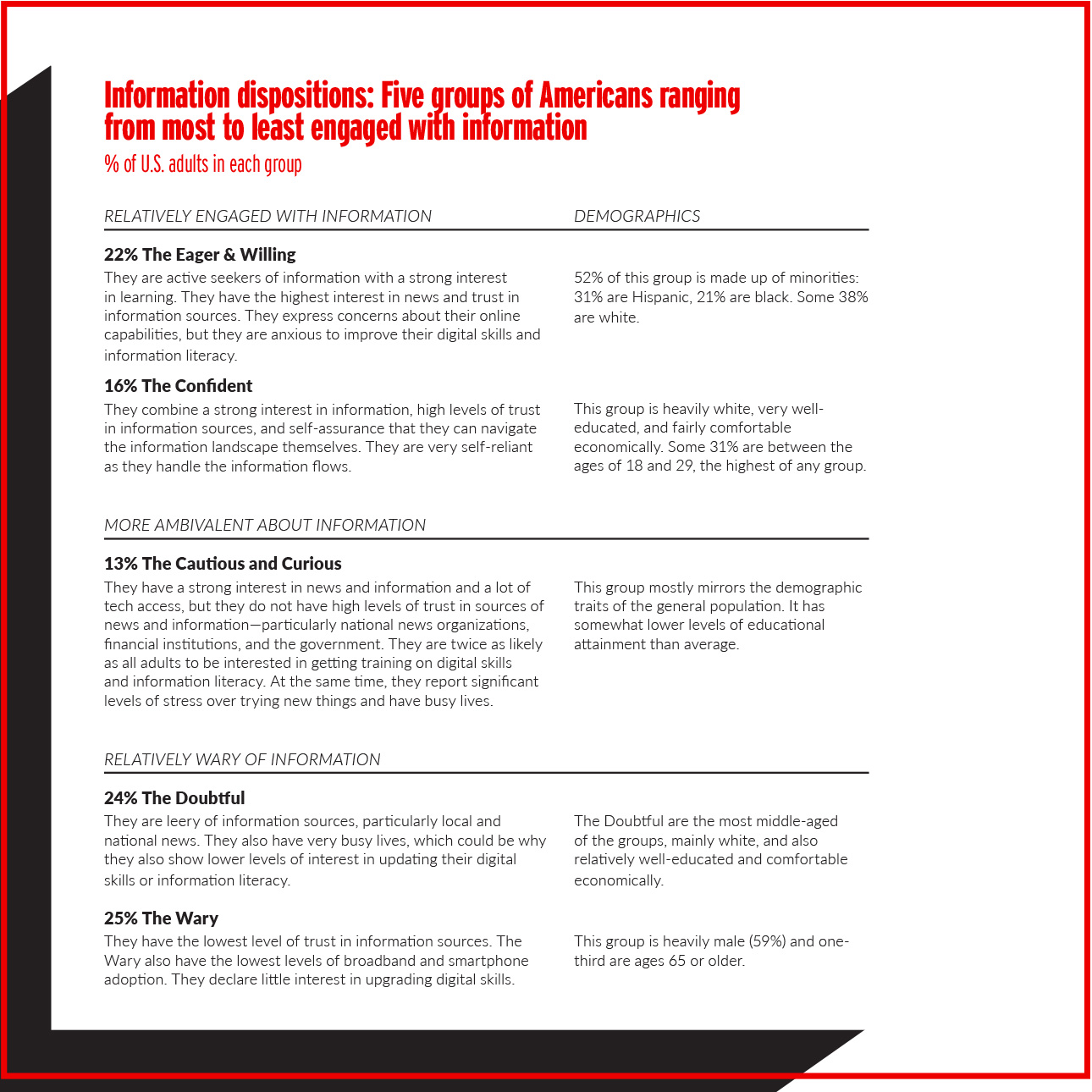

Another report, “How People Approach Facts and Information,” based on findings from a nationally representative survey of American adults, revealed a public with markedly ambivalent attitudes about facts. The report grouped the U.S. population into five “information-engagement typologies,” depending on their level of interest, engagement, or disengagement with news and information. While 38 percent of adults have relatively strong interest and trust in seeking and finding reliable information, 49 percent fell into groups that are relatively disengaged, unenthused, and wary about even seeking facts.

Compounding these difficulties, much of the U.S. public is not very good at identifying facts, according to a report from the center’s journalism and media project, “Distinguishing Between Factual and Opinion Statements in the News.” That survey asked more than 5,000 adults to try to identify five factual statements and five opinion statements. While a majority correctly identified at least three of the five statements in each set, that’s a result only slightly better than random guesses. Far fewer respondents got all five correct, and roughly a quarter got most or all wrong.

Lessons of history are helpful in putting the current misinformation crisis into context, Rainie says. He uses the example of the 15th-century invention of the printing press to illustrate the unintended consequences of revolutionary changes in media and modes of communication. With the invention of movable type, he says, “the ‘fake-news’ folks of that day sprung to life in a dramatic way. People who practiced folklore and witchcraft and demonology and alchemy all had new ways to promote their stuff, to solicit testimonials, to speak to people in their own language, and they had a field day.” It took the advances of the Enlightenment and the scientific revolution to subdue the anti-truth forces over the course of time, he says.

Modern-day techniques to manipulate public opinion are increasingly sophisticated, particularly during breaking-news events when search algorithms and social media platforms enable instantaneous metastasizing of misstatements. Rainie cites the example of the 2017 Las Vegas Strip hotel shooting that killed 58 people and wounded 422. Phony stories and headlines blaming “crisis actors,” Democrats, ISIS, and anti-fascist groups were quickly disseminated to millions of users.

“A key tactic of the new anti-truthers is not so much to get people to believe in false information,” Rainie says. “It’s to create enough doubt that people will give up trying to find the truth, and distrust the institutions trying to give them the truth.” He credits Stanford University history of technology professor Robert Proctor with naming the concept: “agnotology” (combining the Greek agnos, or “not knowing,” with logy, or “the science of”), which describes intentionally induced doubt and ignorance, through which people who try to learn more about a subject only become more uncertain and distrustful.

Other organizations and foundations are exploring the impact of internet misinformation. In one of the more ambitious efforts, Rand Corp. in 2017 began an ongoing study it calls “Truth Decay: An Initial Exploration of the Diminishing Role of Facts and Analysis in American Public Life.” The Rand study said that “truth decay” was nothing new, noting three eras in American history in which economic, political, and psychological factors diverted public discussion from reliance on facts: the Gilded Age of the 1880s and 1890s, the Roaring ’20s through the 1930s, and the Vietnam War era of the late 1960s and early 1970s.

But Rand’s study concluded that distrust of institutions has become more severe in the current era and that the sharp disagreement on the basic facts important to society has reached an unprecedented level.

Pew’s Lee Rainie says that much of the hope of the experts is that society can develop a “cyborg future of truth” in which human-machine combinations take advantage of artificial intelligence mechanisms to expose fake news, combat doubtmongers, and restore public confidence in facts. But, he notes, technology alone can’t solve the problem. “One thing the experts also urge is teaching a new set of literacies,” Rainie says, “that is anchored in being good, smart, technologically enabled citizens.”

The News Literacy Project, a nonpartisan, independent nonprofit that teaches middle and high school students how to know what to believe in the digital age, is a step in that direction. The project has seen explosive growth in the past two years, doubling its staff to 18 and its budget to $3.6 million, thanks to funders who share the organization’s sense of urgency, says Alan Miller, a Pulitzer Prize-winning former Los Angeles Times reporter who founded the project in 2006.

The group created a virtual classroom it calls Checkology with a broad digital curriculum led by journalist-educators who teach students the importance of the First Amendment and how to distinguish between news, opinion, advertising, propaganda, and falsehood. In just two years, 16,000 teachers have used Checkology with more than 115,000 students in every state and overseas, Miller says, and assessment data show the vast majority of students report becoming more interested in news, more skeptical, more confident in their judgments, and more eager to become civically involved and vote. Fast Company magazine called the effort “one of the most important educational tools of our time.”

Miller sees hope in an increasing number of school districts and states that are restoring mandates to teach civics and are creating new programs in media literacy. “We’re no panacea,” he says, “but we’re hopeful about the potential to combat what is the equivalent of a public health epidemic; misinformation is damaging democracy and is dangerous to the health of its citizens.”

As efforts like the News Literacy Project expand their missions, the Pew Research Center will continue to study the future of truth in the digital era. With even the top experts so sharply divided on whether truth will survive and be discernible, the coming years will be a critical time for information in this information age.

“For some people, there is some comfort to be had in the 50-50 verdict of the experts because it means the story is still unfolding,” Rainie says. “And to these experts that means that with proper attention now and elevated conversations, we may be able to shape the future in a way that facts remain knowable—and respected.”

Peter Perl spent more than three decades as a reporter and editor at The Washington Post.