Mississippi’s 7 Elements of Quality Program Design

How one state incorporates rigorous evidence of effectiveness into budgeting decisions

Overview

Since Mississippi joined the Pew-MacArthur Results First Initiative in December 2012, the state has demonstrated its commitment to use rigorous research on “what works” to inform its policy and budget decisions. As part of this effort, the state recently established a process to ensure that any new funding requests submitted as part of the annual budget process are backed by strong evidence demonstrating a program’s effectiveness.

The process relies on a screening tool called the Seven Elements of Quality Program Design. This checklist helps determine whether funding requests for new programs, or new activities proposed as part of existing programs, are supported by research that demonstrates their effectiveness. Each part of the checklist contains multiple questions that must be answered thoroughly to achieve a positive recommendation from legislative staff, including questions on the programs’ premise, evidence of effectiveness, and plan for monitoring implementation and measuring outcomes. (A description of the seven elements follows.) The tool was created by Mississippi state Representative Toby Barker (R) and state Senator Terry Burton (R) in consultation with the staff of the state’s Joint Legislative Committee on Performance Evaluation and Expenditure Review (PEER committee).

“We think that the questions associated with the seven elements are a great way for legislators involved in the appropriations process to direct limited funds to intervention programs that rigorous research shows to be effective in achieving desired outcomes,” said Linda Triplett, director of PEER’s Performance Accountability Office. “By carefully examining the research evidence supporting new funding requests, the Legislature can shift resources away from programs with no evidence of effectiveness to programs with proven positive impact. Legislators can then monitor the performance of evidence-based programs to ensure that they are achieving the results reported in the research literature.”

How the process works

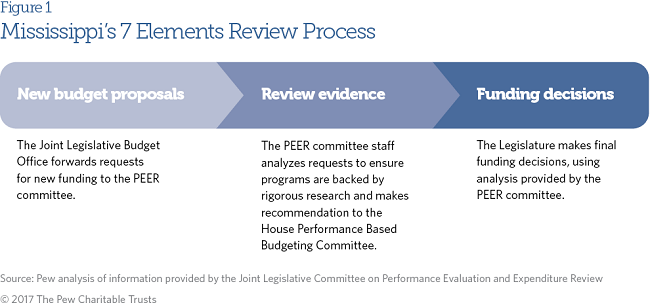

The seven elements were first included as requirements in the Joint Legislative Budget Office’s instructions for the fiscal year ending June 30, 2016.[1] The process works like this: After budget proposals are submitted to the Budget Office, the requests for new funding are sent to staff of the PEER committee. Analysts from PEER, who are trained in research methods and program evaluation, review the requests using the screening tool and provide recommendations to the Legislature, which makes the final funding determination.

Initial results

The first year yielded promising results for legislative and executive branch leaders. The process has added rigor to the state’s performance-based budgeting by creating a clear standard for evaluating new funding requests. The information has proved particularly useful for legislators who often had to make funding recommendations with limited information on program effectiveness. The process has also challenged agencies to carefully consider the new programs they propose to ensure that they are supported by strong evidence and include a plan for monitoring implementation.

During the first year, no program met all of the criteria, primarily because PEER staff determined that the supporting research provided in the program requests did not meet the standards for rigorous evidence as defined by Mississippi Code Section 27-103-159.[2] However, some programs whose funding requests lacked rigorous research but were otherwise considered promising were approved as one-year pilot programs. During the pilot year, the agencies or vendors running them must conduct research on the program’s effectiveness. The programs will then be considered for continued funding the following year, depending upon the evaluation results. In cases in which outcomes require additional time to be measured, researchers will present interim data.

This approach—allowing agencies and program vendors to conduct research on pilot programs—is part of an effort to encourage internal capacity building, which the state believes is critical for effective evidence-based policymaking. “One of the things that stands in the way of evidence-based government is capacity,” said Kirby Arinder, research methodologist for the PEER committee. “If the agencies can be shown how to use rigorous research to select programs or modify existing programs, they can better incorporate it into their work.”

Key challenges

Mississippi encountered several challenges in implementing the new requirements for screening budget requests. Although the seven elements were designed to be applied to new intervention programs—in areas that traditionally have been rigorously evaluated and have a strong evidence base—the state received some requests for new programs that were not interventions and that were in policy areas where strong research on effectiveness is lacking. For example, one request sought to fund the training of a new cohort of state troopers, which is not an intervention program. Many requests for new program funding also came in during the legislative session from vendors outside the formal budget process, restricting the time available for PEER committee staff to closely analyze each request.

Triplett said these and other issues will be resolved as agencies become more familiar with the purpose of the seven elements and the type of evidence that should be provided, and as outside vendors come to understand the requirements and time frame for submitting requests. “The first year was a new process for everyone, and we are seeking to target the initiative better in future years,” said Triplett. To support agencies with their requests, PEER staff members regularly refer them to the Results First Clearinghouse Database for examples of intervention programs with high-quality research documenting their effectiveness.

Moving forward

As the seven elements are better understood statewide, Mississippi leaders are optimistic that this tool will be an important aspect of the state’s overall shift toward evidence-based policymaking and performance-based budgeting. Triplett believes that state agencies will learn the process as they create inventories of their programs in compliance with Mississippi Code Section 27-103-159 (2014), an undertaking with which the PEER committee staff is assisting. The legislation also requires agencies to establish a baselining procedure for evaluating programs that don’t meet the standards of “evidence-based” or “research-based” and a method for conducting cost-benefit analyses of all programs. “We can see that they are learning about what constitutes an evidence-based program through the inventory process,” said Triplett.

Mississippi’s 7 Elements of Quality Program Design

All Mississippi agencies making requests for new program funding are required to address the following questions:[3]

1. Program premise.

- What public problem is this program seeking to address? How will this program address the problem? What other state entities are involved in addressing this problem, and how does your proposed program differ from the others already in place?

- Does your proposed program effort link to a goal or benchmark identified in Mississippi’s statewide strategic plan? Explain where this program fits into your agency’s strategic plan.

2. Needs assessment.

- What is the statewide extent of the problem stated in numerical and geographic terms? What portion of the total need identified does this program seek to address?

3. Program description.

- What specific activities will you carry out to achieve each of your expected program outcomes? Over the period for which you are requesting funding, how many of each of these activities do you intend to provide and in which geographic locations?

- How many individuals do you intend to serve? Once the program is fully operational, what are the estimated ongoing annual costs of operations? What is the estimated cost per unit of activity? List each expected benefit of this program per unit of activity provided. If known, include each benefit’s monetized value as well as a detailed explanation of the calculations and assumptions used to monetize the value of each benefit.

- What is the expected benefit-to-cost ratio for this program (that is, the total monetized benefits divided by total costs)?

4. Research and evidence filter.

- As defined in Mississippi Code Section 27-103-159 (1972), specify whether your program is evidence-based, research-based, a promising practice, or none of the above. Attach copies of or online links to the relevant research supporting your answer.

- If there is no existing research supporting this program, describe in detail how you will evaluate your pilot program with sufficient rigor to add to the research base of evidence-based or research-based programs.

5. Implementation plan.

- Describe all startup activities needed to implement the program and the cost associated with each activity or the existing resources that you will use to carry out the activity. Provide a timeline showing when each startup activity will take place and the date you expect the program to be fully operational.

6. Fidelity plan.

- Provide a copy of your plan for ensuring that your program will be implemented with fidelity to the research-based program design. Your plan should include a checklist of the program components identified in the supporting research literature that are necessary to achieve the reported impacts.

- If there is no existing research base for this program, explain the key components critical to the success of your pilot program and how you will ensure that these components are implemented in accordance with program design.

7. Measurement and evaluation.

- What specific outcomes do you expect to achieve with this program? Each targeted outcome must be stated in measurable terms that include how the outcome is calculated as well as the targeted direction and percentage change in the outcome to be achieved by a specified date. Explain how you arrived at the expected rate of change by the target date. For each outcome measure, report the most recent data available at the time of your request and the reporting period for the data.

- How often will you measure and evaluate this program? What specific performance measures will you report to the Legislature? At a minimum, you should include measures of program outputs, outcomes, and efficiency.
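The arithmetic behind elements 3 and 7 can be illustrated with a short sketch. This is not part of Mississippi's budget instructions; it only works through the definitions given in the questions (cost per unit of activity, total monetized benefits divided by total costs, and the targeted percentage change in an outcome). The function names and all dollar figures and counts below are hypothetical, chosen purely for illustration.

```python
def cost_per_unit(total_cost: float, units: int) -> float:
    """Estimated cost per unit of activity (element 3)."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total_cost / units


def benefit_cost_ratio(benefits_per_unit: list[float], units: int,
                       total_cost: float) -> float:
    """Total monetized benefits divided by total costs (element 3)."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    total_benefits = sum(benefits_per_unit) * units
    return total_benefits / total_cost


def percent_change(baseline: float, target: float) -> float:
    """Targeted percentage change in an outcome measure (element 7)."""
    if baseline == 0:
        raise ValueError("baseline must be nonzero")
    return (target - baseline) / baseline * 100.0


# Hypothetical request: 200 units of activity at a total cost of $250,000,
# with two monetized benefits per unit ($1,200 and $300).
unit_cost = cost_per_unit(250_000.0, 200)
ratio = benefit_cost_ratio([1_200.0, 300.0], 200, 250_000.0)

# Hypothetical outcome target: move a measure from 40 to 30 by the target date.
change = percent_change(40.0, 30.0)

print(f"cost per unit: ${unit_cost:,.2f}")
print(f"benefit-to-cost ratio: {ratio:.2f}")
print(f"targeted change: {change:.1f}%")
```

A ratio above 1 means the monetized benefits exceed the costs under the stated assumptions, which is why the instructions also ask agencies to explain the calculations and assumptions behind each monetized benefit.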

Endnotes

1. Mississippi Joint Legislative Budget Committee, “Budget Instructions/Forms” (June 1, 2016), 13–15, http://www.lbo.ms.gov/pdfs/obrsforms/2018_budget_instructions.pdf.

2. Mississippi Code Section 27-103-159 (2014) (http://law.justia.com/codes/mississippi/2014/title-27/chapter-103/mississippi-performance-budget-and-strategic-planning-act-of-1994/section-27-103-159) defines the research necessary for a program to be labeled evidence-based, research-based, and promising; and requires state agencies to create inventories of their programs and activities, and then to categorize each based on the evidence supporting its effectiveness.

3. A summary of the seven elements was provided by Linda Triplett, director of PEER’s Performance Accountability Office. For the complete text of the questions associated with the seven elements, see Mississippi Joint Legislative Budget Committee, “Budget Instructions/Forms.”