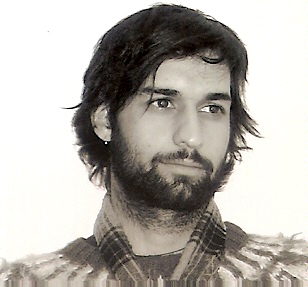

Ezequiel M. Arneodo, Ph.D.

- Title: Assistant Investigator
- Department: The Institute of Physics La Plata (IFLP)
- Institution: Consejo Nacional de Investigaciones Científicas y Técnicas (CONICET)
- Address: Diagonal 113 entre 63 y 64
- City, ZIP: La Plata, Buenos Aires, 1900
- Country: Argentina


- Website: https://www.conicet.gov.ar/new_scp/detalle.php?id=31101&datos_academicos=yes
- Research field: Neuroscience
- Award year: 2014
- Mentor: Dmitry Rinberg, Ph.D.

Research

How does the brain transform intention into action? Brain-machine interfaces (BMIs), which couple neural activity to external artificial effectors, seek to answer this question by inferring intention directly from neural activity. Yet this decoding problem is computationally intensive even for the simplest actions, and as the intended behavior grows more complex, the computations become intractable. As a result, effective neural prostheses for speech and other natural behaviors remain science fiction.

My goal is to turn this fiction into reality by combining recent advances in machine learning, biophysical modeling of vocal production mechanisms, neuromorphic engineering, and systems neuroscience. Using birdsong, the pre-eminent neurobiological model for natural (i.e., complex), learned vocal motor control, I plan to synthesize song directly from activity in a bird’s brain. My plan pursues two complementary strategies. First, I will reduce the computational complexity of the motor-mapping problem. Instead of attempting the very difficult task of mapping neural activity onto complex muscle movements, I will replace the peripheral vocal organ with a biophysical model whose low-dimensional dynamics capture the full complexity of the motor output. Second, I will implement machine learning algorithms on a low-power neuromorphic hardware platform for real-time computation. This novel marriage of biophysics, neuromorphic engineering, and systems neuroscience will produce a powerful tool for testing hypotheses about how complex behaviors, such as speech, are represented and transformed in premotor brain regions. Insights gained through these efforts could enable a new generation of biomimetic systems.

2014 Pew Fellows

- Matias A. Alvarez-Saavedra, Ph.D.

- Ezequiel M. Arneodo, Ph.D.

- Andrea M. Caricilli, Ph.D.

- Luisina De Tullio, Ph.D.

- Armando Hernandez Garcia, Ph.D.

- Pablo A. Lara-Gonzalez, Ph.D.

- Juan David Ramirez Gonzalez, Ph.D.

- Daniela Paula T. Thomazella, Ph.D.

- Alejandro Vasquez Rifo, Ph.D.

- Yuriria Vázquez Zúñiga, Ph.D.